Charles Gutjahr

We got bad laws because social media shirked its social responsibilities

Children under 16 will be banned from major social media platforms after the Australian parliament passed the Online Safety Amendment bill last week. I think this is a bad law which is not actually going to make kids much safer and could make things worse for everyone.[1]

However, I do not blame the government for this misguided law; I put the blame squarely on the big social media platforms. Tech companies should have dealt with the problems their platforms are causing, but instead it feels like they have been shirking their responsibilities. Australians are overwhelmingly angry with them: 77% want kids banned and 87% want greater penalties on social media companies that do not comply with Australian laws. The government was clearly pressured to step in and do something, anything. The result is a law that is bad for those platforms, a law that they could and should have prevented.

I'll give you a personal anecdote about how Facebook has failed me. This is something I reckon Facebook should have solved, and their lack of action has made it inevitable the government would step in.

My Facebook feed is flooded with scam advertisements. Often, most of the advertising I see on Facebook is for scams. They are the same scams that Facebook has been showing me for years and has failed to stop. Not only does Facebook keep showing me those scams, but Facebook also routinely rejects my reports about them.

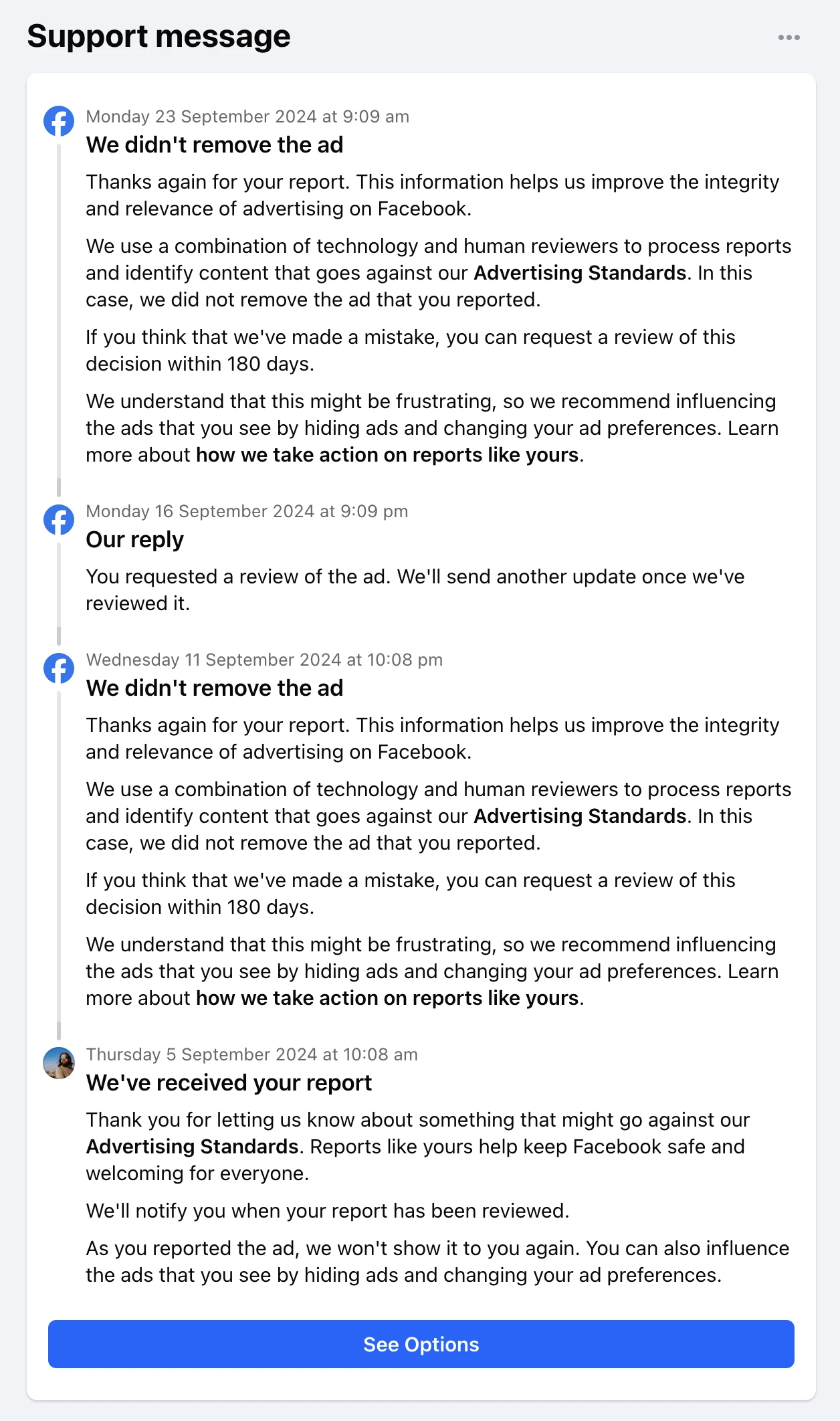

In my last blog post I wrote about one particular fake Paul Hogan scam ad which I reported to Facebook but they didn't remove. Since then I escalated that report by requesting another review of the ad. The second review came back and yet again Facebook did not remove that ad:

This is not an isolated incident. The last 30 scam ads that I've reported to Facebook have not been taken down. The last time Facebook took action on a scam I reported was so long ago that Facebook has since deleted the message from my Support Inbox.

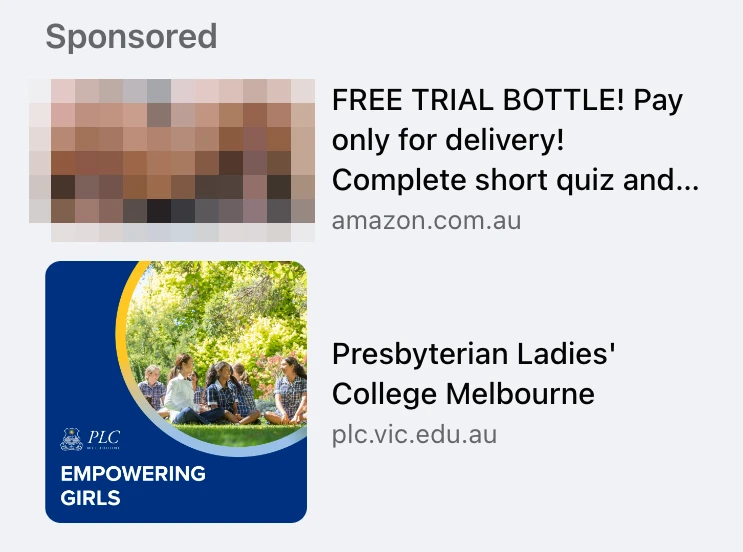

Sometimes instead of showing me scams Facebook shows me advertisements featuring hardcore pornography. Here's an example of one such ad, censored by me so that I am not distributing porn on my own website:

The advertisement that I have pixelated showed a man and woman having sex, with an erect penis and copious amounts of semen. It was a clear breach of Facebook's Advertising Standards, which have specific prohibitions on adult nudity and sexual activity. Facebook displaying this pornographic ad was made even worse by the fact that they placed it next to an ad for a private girls' school.

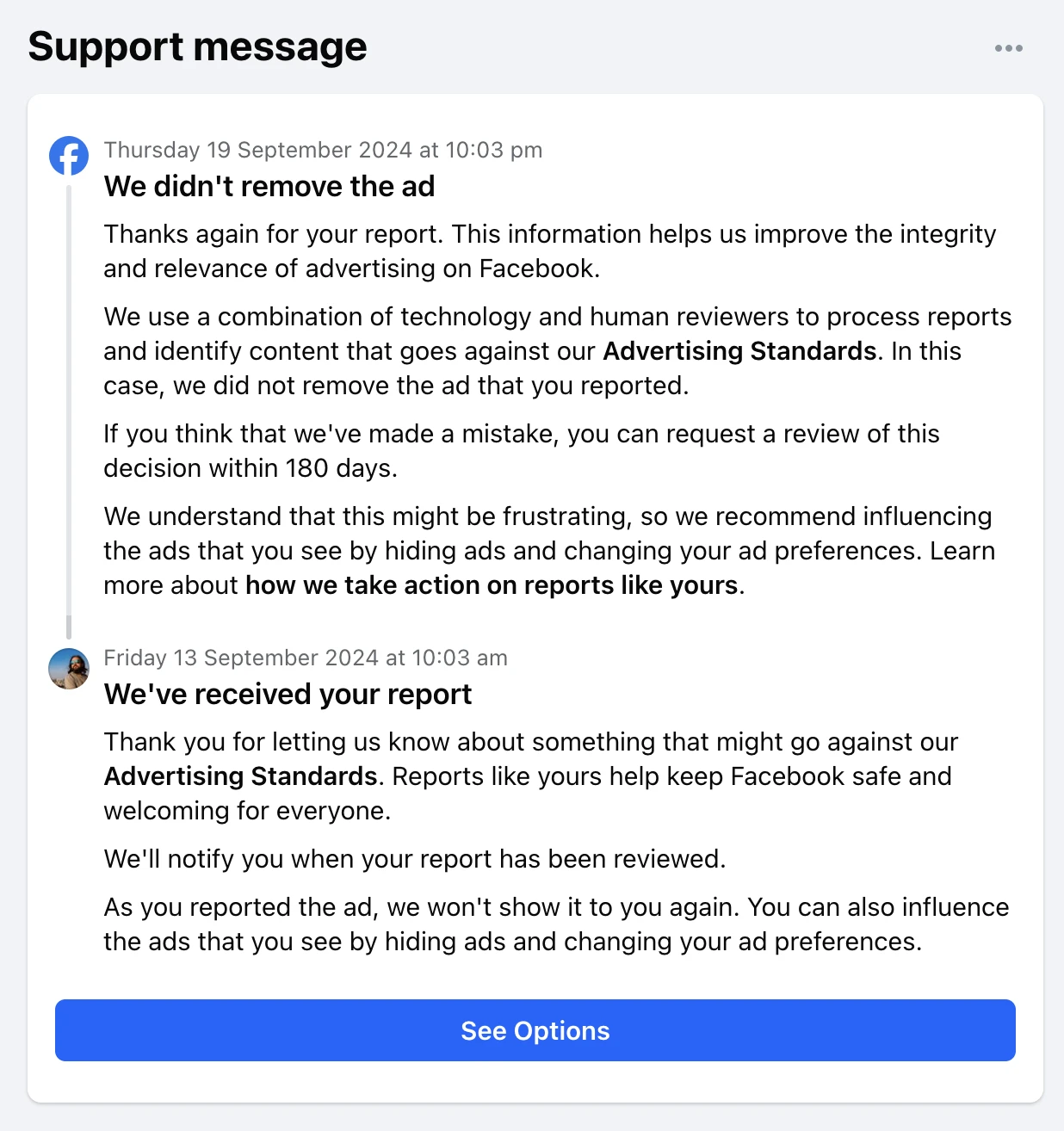

I reported the ad to Facebook but they did not remove it:

Facebook has failed here.

I understand that occasionally things slip through the cracks, but Facebook should take action when such problems are reported. Unfortunately Facebook routinely fails to take action when I report a scam. That is unacceptable to me, and surely to most Australians as well.

What would be acceptable? I can't speak for all Australians but I think a reasonable expectation is that Meta should not allow scam ads or pornographic ads to appear on Facebook in the first place. I reckon Facebook could step up and actually achieve that: simply have a person review each ad before it is published, looking for the obvious scams and porn. Perhaps AI will be helpful to detect scams because Facebook is an AI leader, but if they're using AI to detect scams now it is clearly failing given the prevalence of scams. So I think Facebook really just needs a lot of people to do the reviews. It would be expensive but surely that is better than alienating most of the population and forcing government to ban social media?

Facebook scams are not the only problem on social media and not the main reason behind the government ban on social media—just the one I encounter the most. But those scams are a reasonable illustration of the kinds of problem that social media platforms should be making more of an effort to fix. If they don't fix these things then the government will... and probably badly.

btw. I will be taking my own advice on my new project neighbo.au. You can read about that on the Neighbo blog.

Footnotes

- This blog doesn't focus on why this law is so bad because plenty of other people have done that better than I can. Check out this Mandarin article by Zoe Rose, or Richard Taylor's overview, or Sarah Hanson-Young's dissenting report from the Senate inquiry.